The Definitive JavaScript SEO Guide [Best Practices and Beyond]

JavaScript has become an integral part of modern web development, powering dynamic and interactive websites. However, its widespread use brings unique challenges for Search Engine Optimization (SEO). Because search engines have historically struggled to render JavaScript content, developers and SEOs must work in tandem to ensure that sites are both fully functional and discoverable.

The Definitive JavaScript SEO Guide offers both best practices and advanced insights to help overcome these challenges. Below, we outline critical components of a successful strategy.

The Basics: JavaScript and Search Engines

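Search engines like Google have improved their ability to process JavaScript, but limitations remain. To optimize for visibility, it's essential to understand how search engine bots crawl, render, and index JavaScript content. Google, for example, processes JavaScript pages in three phases: it crawls the raw HTML, queues the page for rendering in a headless, evergreen version of Chromium, and then indexes the rendered result. Rendering can lag behind crawling, and other search engines may execute less JavaScript, so content that exists only after scripts run is at greater risk of being indexed late or missed.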

Best Practices:

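1. Server-Side Rendering (SSR) or Pre-Rendering: Generate the HTML on the server for each request (SSR) or ahead of time at build time (pre-rendering), so the initial response already contains the essential content and links in a form search engines can crawl immediately, without executing JavaScript.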

2. Progressive Enhancement: First build a functional website using only HTML and CSS, then enhance it with JavaScript for browsers that support it. This helps keep the core content and links accessible even if JavaScript fails to load or a crawler does not execute it.

3. Hybrid Rendering: Render the initial view on the server, then let client-side JavaScript take over (often called hydration) for later interactions. This combination strikes a balance between a dynamic user experience and search engine accessibility.

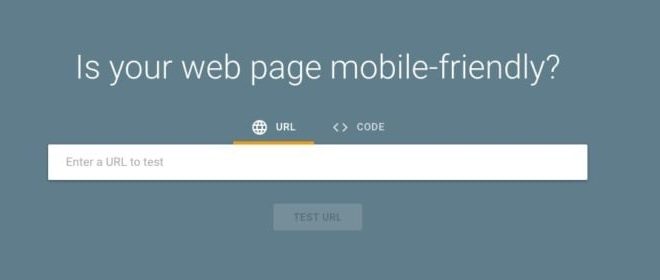

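4. Ensure Bots Can Crawl JavaScript: Don't block the JavaScript, CSS, or API endpoints a page depends on in robots.txt. Use the URL Inspection tool in Google Search Console (which replaced the retired Fetch as Google tool) to view the HTML Googlebot rendered and any page resources it could not load.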

5. Avoid Cloaking: Ensure that the content served to search engine bots is the same as what's presented to users. Showing bots different content is cloaking, which violates Google's spam policies.
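The server-side rendering advice in item 1, which is also the server half of the hybrid approach in item 3, can be sketched in TypeScript. This is a minimal illustration under stated assumptions, not how any particular framework works: the Product shape, the escapeHtml and renderProductPage helpers, and the /app.js bundle are all hypothetical. Frameworks such as Next.js or Nuxt handle this step for you; the point is only that the HTML a crawler receives already contains the title, description, and body content.

```typescript
// Minimal SSR sketch: the server returns complete HTML, so a crawler sees the
// content in the first response without executing JavaScript.
// Product, escapeHtml, renderProductPage and /app.js are hypothetical names.

interface Product {
  name: string;
  description: string;
  priceUsd: number;
}

// Escape characters that are significant in HTML text and attribute values.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

function renderProductPage(product: Product): string {
  const title = escapeHtml(product.name);
  const description = escapeHtml(product.description);
  const price = product.priceUsd.toFixed(2);
  return [
    "<!doctype html>",
    '<html lang="en">',
    "<head>",
    `  <title>${title}</title>`,
    `  <meta name="description" content="${description}">`,
    "</head>",
    "<body>",
    `  <h1>${title}</h1>`,
    `  <p>${description}</p>`,
    `  <p>Price: $${price}</p>`,
    // Client-side code loads afterwards to add interactivity (hydration in a
    // hybrid setup); the content above does not depend on it.
    '  <script src="/app.js" defer></script>',
    "</body>",
    "</html>",
  ].join("\n");
}

const html = renderProductPage({
  name: "Trail Shoe",
  description: "Lightweight shoe for rocky terrain & mud.",
  priceUsd: 89.5,
});
console.log(html);
```

Sending this string as the HTTP response (via Node's http module, Express, or a framework) is left out. A crawler that never runs /app.js still receives the full product content.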

Advanced Insights:

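1. Lazy Loading Implementation: Lazy loading conserves bandwidth and speeds up initial page loads, but search engines must still be able to find the lazy-loaded content. Because crawlers generally do not scroll, prefer the browser's native loading="lazy" attribute or the IntersectionObserver API over scroll-event handlers. With native lazy loading, the real image URL stays in the src attribute, where crawlers can see it.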

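2. Structured Data: Implementing structured data (JSON-LD is the format Google recommends) helps search engines understand the content of your pages. Google can read JSON-LD that JavaScript injects into the page, but including it in the server-rendered HTML removes the dependence on rendering.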

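3. AJAX Crawling Scheme Deprecated: Google once recommended the AJAX crawling scheme, in which hashbang (#!) URLs were mapped to ?_escaped_fragment_= URLs that served pre-rendered snapshots. Google deprecated the scheme in 2015 and no longer supports it. Modern sites should instead use the History API (history.pushState()) so that every view has a real, crawlable URL that the server can also respond to directly.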

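4. Content Visibility via the Document Object Model (DOM): Essential content should be present in the rendered DOM without requiring user interactions such as clicks or scrolls, because crawlers like Googlebot generally do not perform them. Likewise, navigation should use standard <a href> links rather than click handlers on other elements, since crawlers discover URLs from href attributes.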
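For item 1 on lazy loading, here is a minimal sketch of a markup helper, assuming images are rendered on the server. The ImageSpec shape and the lazyImageTag and escapeAttr helpers are hypothetical. The design choice is to rely on the browser's native loading="lazy" attribute instead of a scroll listener that swaps a data-src value into src, so the real image URL is always present in the markup.

```typescript
// Sketch: crawler-friendly lazy-loaded images (hypothetical helper names).
// The real URL stays in src, and the browser's native loading="lazy"
// defers the download until the image approaches the viewport.

interface ImageSpec {
  src: string;
  alt: string;
  width: number;
  height: number;
}

// Escape characters that would break out of a double-quoted attribute.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

function lazyImageTag(img: ImageSpec): string {
  // width/height let the browser reserve space, so the deferred load
  // does not shift the surrounding layout.
  return (
    `<img src="${escapeAttr(img.src)}" alt="${escapeAttr(img.alt)}" ` +
    `width="${img.width}" height="${img.height}" loading="lazy">`
  );
}

console.log(
  lazyImageTag({ src: "/img/trail-shoe.jpg", alt: "Trail shoe, side view", width: 800, height: 600 }),
);
```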
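Item 2 recommends JSON-LD. The sketch below builds a schema.org Article block as a string that can go in the page head. The ArticleMeta shape, the articleJsonLd helper, and the sample values are hypothetical, and it covers only a few common Article properties, not everything a given rich result may require.

```typescript
// Sketch: build a schema.org Article JSON-LD <script> block.
// ArticleMeta and articleJsonLd are hypothetical names for illustration.

interface ArticleMeta {
  headline: string;
  authorName: string;
  datePublished: string; // ISO 8601 date, e.g. "2024-05-01"
}

function articleJsonLd(meta: ArticleMeta): string {
  const data = {
    "@context": "https://schema.org",
    "@type": "Article",
    headline: meta.headline,
    author: { "@type": "Person", name: meta.authorName },
    datePublished: meta.datePublished,
  };
  // Escape "<" as \u003c so a value containing "</script>" cannot close the
  // tag early; JSON parsers turn it back into "<".
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}

console.log(
  articleJsonLd({
    headline: "The Definitive JavaScript SEO Guide",
    authorName: "Jane Doe",
    datePublished: "2024-05-01",
  }),
);
```

The same string can be written into the server-rendered head or inserted by client-side code; emitting it on the server means it does not wait for rendering.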
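For sites migrating away from the AJAX crawling scheme in item 3, a hypothetical helper, hashbangToPath, can map a legacy #! URL to the clean path a History API router would serve. This sketch makes a deliberate simplification: the route after #! replaces the original path and query entirely, which may not match every site's old URL layout.

```typescript
// Sketch: map a legacy hashbang URL to a clean, crawlable URL.
//   https://example.com/#!/shoes/42  ->  https://example.com/shoes/42
// hashbangToPath is a hypothetical helper. Any path or query that appears
// before "#!" is dropped in this simplified version.

function hashbangToPath(href: string): string {
  const url = new URL(href);
  if (!url.hash.startsWith("#!")) {
    return url.href; // not a hashbang URL; nothing to migrate
  }
  // Everything after "#!" (including any "?query") becomes the new path.
  let route = url.hash.slice(2);
  if (!route.startsWith("/")) {
    route = "/" + route;
  }
  return new URL(route, url.origin).href;
}

console.log(hashbangToPath("https://example.com/#!/shoes/42"));
```

Because the fragment is never sent to the server, this mapping has to run in the browser, for example by calling location.replace() with the result at startup whenever it differs from location.href. Later navigation can then use history.pushState() with clean URLs that the server also knows how to render.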

Future-Focused Approach:

1. Headless CMS & APIs: A headless CMS can offload some SEO work from developers by letting SEO teams manage titles, meta descriptions, canonical URLs, and other SEO attributes directly, with the front end retrieving those fields through the CMS's API.

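2. PWA (Progressive Web Apps) Considerations: Since PWAs often rely heavily on JavaScript, check that service workers and caching do not hide content from search results. Googlebot loads pages statelessly (for example, it clears cookies and local storage between page loads), so every URL should serve its full content on a first, uncached visit.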
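To illustrate item 1 above, here is a sketch of a front end turning SEO fields managed in a headless CMS into head tags. The CmsSeoFields shape and the renderSeoHead and escapeHtml helpers are hypothetical: every CMS names and structures these fields differently, and fetching them from the CMS API is left out.

```typescript
// Hypothetical shape of SEO fields an editor maintains in a headless CMS.
interface CmsSeoFields {
  title: string;
  metaDescription: string;
  canonicalUrl: string;
  noindex?: boolean;
}

// Escape characters that are significant in HTML text and attribute values.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Turn CMS-managed fields into tags for the server-rendered <head>.
function renderSeoHead(seo: CmsSeoFields): string {
  const tags = [
    `<title>${escapeHtml(seo.title)}</title>`,
    `<meta name="description" content="${escapeHtml(seo.metaDescription)}">`,
    `<link rel="canonical" href="${escapeHtml(seo.canonicalUrl)}">`,
  ];
  // Only emit a robots directive when an editor explicitly asks for it.
  if (seo.noindex) {
    tags.push('<meta name="robots" content="noindex">');
  }
  return tags.join("\n");
}

console.log(
  renderSeoHead({
    title: "Trail Shoes & Boots",
    metaDescription: "Waterproof trail footwear for every season.",
    canonicalUrl: "https://example.com/shoes",
  }),
);
```

Rendering these tags on the server, rather than setting document.title from client code, keeps the CMS-managed values visible in the raw HTML.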

Continuous Testing:

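Regularly test how your website's JavaScript affects its discoverability. Google Lighthouse audits performance and basic SEO signals, Screaming Frog SEO Spider can crawl a site with JavaScript rendering enabled, and the URL Inspection tool in Google Search Console shows the HTML that Googlebot actually rendered. Comparing the raw HTML response with the rendered HTML reveals which content depends on JavaScript.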
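One check worth automating alongside these tools is whether essential content is present in the raw HTML, before any JavaScript runs. The missingFromRawHtml helper below is a hypothetical, minimal sketch: it performs a case-insensitive substring search and does not strip tags or decode HTML entities, so a phrase containing & would need to be searched for in its encoded form.

```typescript
// Sketch: list the must-have phrases that are absent from the raw HTML
// (the server response before any JavaScript executes).
// missingFromRawHtml is a hypothetical helper name.
function missingFromRawHtml(rawHtml: string, requiredPhrases: string[]): string[] {
  const haystack = rawHtml.toLowerCase();
  return requiredPhrases.filter((phrase) => !haystack.includes(phrase.toLowerCase()));
}

// A client-rendered shell: the product content only appears after JS runs,
// so both phrases are reported as missing.
const shell = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>';
console.log(missingFromRawHtml(shell, ["Trail Shoe", "Add to cart"]));
```

In practice the raw HTML could come from fetch(url) followed by response.text() in Node 18 or later, and the same phrases can be checked against the rendered HTML shown by the URL Inspection tool. A phrase that is missing only from the raw HTML depends on JavaScript rendering.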

Conclusion:

Integrating these best practices into your website's design and development processes helps ensure that you don't sacrifice user experience for crawlability, or vice versa. The key is understanding both your audience's needs and the technical underpinnings of modern search engines, so that you can bridge the gap between dynamic functionality and optimal ranking potential in SERPs (Search Engine Results Pages).

As we look beyond current best practices, keep track of changes that search engines announce about how they process JavaScript content. An adaptable mindset will help your strategies remain effective as web standards and technologies evolve.